Fixing the Rails 7+ networking stack

Let’s talk networking stacks. Which, I know, boring, but it’s foundational!

The problem and general stack

I want Little CRM to be as resilient as possible, which means making it progressively enhance through as much of the gamut as possible:

- No JS loaded.

- JS is partially loaded (but broken).

- JS is loaded, but Websockets are busted.

- Websockets are functional, giving us real-time updates.

Given this, the network stack needs the following:

- Support for standard HTTP request/responses, even if they’re not ideal. Progressive enhancement is about allowing people to keep using your app if things are non-ideal (but still functional), not a 1:1 replication top-to-bottom.

- A sensible client-side API for handling fetch/AJAX-based requests.

- A lightweight real-time update layer using Websockets, as minimally impactful as possible in both client-side code & the app’s dependence on it.

Generally speaking, the stack looks like this:

- Write the page markup semantically using native form elements & links.

- Use Mrujs to progressively enhance the forms & links that should use fetch/AJAX requests.

- Take advantage of Rails’ `respond_to` method to provide both ideal (fetch/AJAX) and fallback HTML responses with minimal code duplication.

- Use CableReady as the response protocol for fetch/AJAX requests (using CableCar), while also using it to broadcast changes using the same payload for both.

- Add light event handlers in the browser to handle edge cases, such as preventing double-prepends and augmenting form submission events to autofocus & reset forms. Thanks to the beauty of Mrujs & CableReady’s designs, only 3 were needed!
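The `respond_to` piece is what makes the fallback cheap. One action offers multiple response formats, and the server picks one based on what the request asks for.

Here’s a rough JavaScript model of that idea, purely to illustrate it. It is not Rails’ actual negotiation algorithm, which has its own rules for `*/*`, q-values, and format parameters. `negotiate` and `MIME_TO_FORMAT` are hypothetical names. The `text/vnd.cable-ready.json` MIME type is my assumption of what CableReady registers for the `:cable_ready` format, so check it against your installed version.

```javascript
// Hypothetical, simplified model of respond_to-style content negotiation.
// This is NOT Rails' algorithm; it only illustrates "ideal branch + HTML fallback".

// ASSUMPTION: "text/vnd.cable-ready.json" is the MIME type CableReady registers for
// its :cable_ready format. Verify against the version you have installed.
const MIME_TO_FORMAT = {
  "text/vnd.cable-ready.json": "cable_ready",
  "application/json": "json",
  "text/html": "html",
}

function negotiate(acceptHeader, responders) {
  // "a/b;q=0.9, c/d" -> ["a/b", "c/d"], in the order the client listed them (q-values ignored)
  const requested = (acceptHeader || "")
    .split(",")
    .map((part) => part.split(";")[0].trim())
    .filter(Boolean)

  for (const mime of requested) {
    const format = MIME_TO_FORMAT[mime]
    if (format && responders[format]) return responders[format]()
  }

  // Nothing more specific matched: plain HTML is the safety net every action provides.
  return responders.html()
}

// One "action" with an ideal branch and an HTML fallback.
const responders = {
  html: () => "redirect to the timeline",
  cable_ready: () => "render CableCar operations",
}

negotiate("text/vnd.cable-ready.json", responders) // → "render CableCar operations"
negotiate("text/html,application/xhtml+xml", responders) // → "redirect to the timeline"
negotiate(undefined, responders) // → "redirect to the timeline" (fallback)
```

The design point is the final `return`: HTML is always the safety net. A request that can’t (or won’t) ask for anything fancier still gets a working page.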

The first question is, “Why not Hotwire/Turbo?” I mean, this is a Rails app, after all!

Turbo adds excessive complexity and reimplements the browser

After using Hotwire/Turbo in its various iterations, observing folks talk about it, and looking at the larger context of the library, I found the following problems ruled it out here.

It reimplements the core of the browser

Turbo relies heavily on DOM morphing, a core tenet being “write your server-rendered pages and let Turbo morph the DOM & browser history for SPA-like responsiveness.” This introduces major & frustrating points of failure for two of the most foundational parts of a web browser: page loading and history.

Trying to sidestep how the browser handles page loading and its history, using morphing and heavily mucking with the History API, introduces a ton of gotchas. When you factor in:

- Browser caching

- HTTP2/3

- Network speeds

- The complexity of browser engines

I think it’s better to have your standard request/response flow, either as standard page navigation or by returning JSON that some lightweight JS handlers can use to update parts of the existing DOM. Instead, the Turbo approach tries to programmatically diff the changes, morph the page to match, and then change the browser’s history. It’s the same class of problem as a virtual DOM. Even if you’re using something like morphdom, the principle of how it operates is the same.

While you can opt out of these behaviors, you have to do so explicitly, with a boatload of meta tags, configuration, and remembering to do it. Turbo wants to do all this work; it thinks this is the right way to handle behavior. I disagree, because most of the conversations about Turbo I see outside of CodePens & simple examples are seasoned programmers asking why it isn’t working as expected. And if the default behavior of a framework as critical as your frontend stack causes that, then maybe its approach is fundamentally flawed.

CableReady does have DOM morphing and browser history manipulation, but they are opt-in. It’s clear when those behaviors occur because you choose to enable them. You only write code for the behaviors you want—not to rip out the behaviors that cause problems.

Turbo Streams are not an easily grokable/adjustable protocol

Turbo Streams are how Turbo allows you to send incremental changes as part of remote form submissions, but they’re difficult to use outside of the very basic cases.

For those who aren’t familiar with them, this is an example of Turbo Streams from the docs:

```html
<turbo-stream action="append" target="messages">
  <template>
    <div id="message_1">
      This div will be appended to the element with the DOM ID "messages".
    </div>
  </template>
</turbo-stream>

<turbo-stream action="prepend" target="messages">
  <template>
    <div id="message_1">
      This div will be prepended to the element with the DOM ID "messages".
    </div>
  </template>
</turbo-stream>

<turbo-stream action="replace" target="message_1">
  <template>
    <div id="message_1">
      This div will replace the existing element with the DOM ID "message_1".
    </div>
  </template>
</turbo-stream>

<turbo-stream action="after" targets="input.invalid_field">
  <template>
    <!-- The contents of this template will be added after
         all elements that match "input.invalid_field". -->
    <span>Incorrect</span>
  </template>
</turbo-stream>
```

In essence, it’s a series of custom elements that encapsulate the changes to the DOM & browser you want to perform.

I can see how you arrive at this API design! It’s not awful, but it could be so much easier & more extensible.

Like CableCar, the protocol for CableReady operations.

Let’s look at a similar payload of CableCar operations:

```json
[
  {
    "selector": "#messages",
    "html": "<div id=\"message_1\">This div will be appended to the element with the DOM ID \"messages\".</div>",
    "operation": "append"
  },
  {
    "selector": "#messages",
    "html": "<div id=\"message_1\">This div will be prepended to the element with the DOM ID \"messages\".</div>",
    "operation": "prepend"
  },
  {
    "selector": "#message_1",
    "html": "<div id=\"message_1\">This div will replace the existing element with the DOM ID \"message_1\".</div>",
    "operation": "replace"
  },
  {
    "selector": "input.invalid_field",
    "select_all": true,
    "position": "afterend",
    "html": "<span>Incorrect</span>",
    "operation": "insertAdjacentHtml"
  }
]
```

The code that generates this JSON:

```ruby
cable_car.append(
  selector: "#messages",
  html: render_to_string(partial: "...", formats: [:html])
)

cable_car.prepend(
  selector: "#messages",
  html: render_to_string(partial: "...", formats: [:html])
)

cable_car.replace(
  selector: "#message_1",
  html: render_to_string(partial: "...", formats: [:html])
)

cable_car.insert_adjacent_html(
  selector: 'input.invalid_field',
  select_all: true,
  position: 'afterend',
  html: '<span>Incorrect</span>'
)
```

It’s easily understandable; you can explain it in a sentence: there is a JSON array of operations, and you can augment the default behavior with extra JSON data.
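That one-sentence description is close to all you need to write a toy client for it. The sketch below is not CableReady’s implementation; the real client dispatches lifecycle events, supports many more operations, and works on the actual DOM. `performOperations`, `performers`, and the fake `page` object are hypothetical stand-ins so the code can run anywhere.

It shows the two properties that matter here:

- Operations run in order.
- Any extra keys on an operation are passed along untouched to whatever handles it.

```javascript
// Hypothetical toy client for "a JSON array of operations". This is NOT CableReady's
// implementation; the real one also fires lifecycle events and works on the real DOM.

function performOperations(payload, performers) {
  // The same JSON text could come from a fetch() response body or a WebSocket message.
  const operations = typeof payload === "string" ? JSON.parse(payload) : payload

  for (const operation of operations) {
    const perform = performers[operation.operation]
    if (!perform) throw new Error(`Unknown operation: ${operation.operation}`)
    // Each performer receives the whole object, so extra keys ride along untouched.
    perform(operation)
  }
}

// A fake "page" standing in for the DOM: selector -> list of child HTML strings.
const page = { "#messages": [] }

const performers = {
  append: ({ selector, html }) => page[selector].push(html),
  prepend: ({ selector, html }) => page[selector].unshift(html),
}

const payload = JSON.stringify([
  { operation: "append", selector: "#messages", html: "<div>second</div>" },
  { operation: "prepend", selector: "#messages", html: "<div>first</div>" },
])

performOperations(payload, performers)
// page["#messages"] is now ["<div>first</div>", "<div>second</div>"]
```

Because the transport only has to deliver a string, the same payload can come from an HTTP response or a broadcast.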

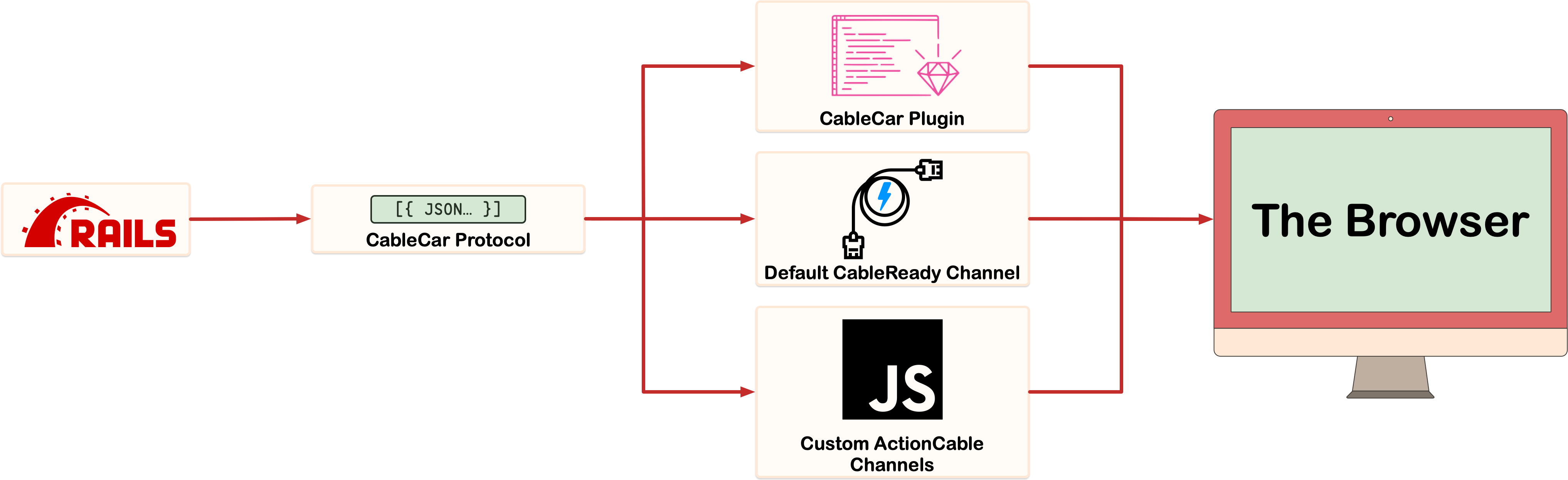

Because it’s an extremely simple protocol, it can be reused across transmission mechanisms with minimal cognitive overhead. In my case, I’m using:

- Mrujs’s CableCar plugin

- CableReady’s default ActionCable interface

- A custom channel I’ve defined

All use the same protocol, avoiding duplicate code & juggling different mental models.

I can’t emphasize enough how useful this is: one protocol, easily understandable, flexible enough to be incorporated into a wide variety of contexts.


Coming back to that point about augmenting the protocol, I have a concrete example of this: prepend only if a selector is missing.

The use case is straightforward:

- We want people to be able to post to a timeline.

- We want updates to show up immediately on the new timeline.

- We want real-time updates for new posts added by others or in another tab.

A demo showing off realtime posts via CableCar and CableReady, using the same JSON payload for both

Here's the Microposts Controller

```ruby
# app/controllers/organizations/microposts_controller.rb
class Organizations::MicropostsController < Organizations::BaseController
  include CableReady::Broadcaster
  include TimelineEdgeContextLoading
  include TimelineEntryCableCar
  include FlashMessagesCableCar

  # ...

  def create
    @form = Organization::MicropostForm.new(organization_micropost_params)
    @form.save!

    message = t('little_crm.microposts.created_message')
    flash[:success] = flash_success_with_icon(message: message, icon: helpers.micropost_icon)
    organization_micropost = @form.micropost

    # Helper method that determines what URL we should redirect to, centralized for easier testing
    redirect_url = helpers.url_for_root_context(
      root_context: @form.creation_postable,
      options: { autofocus_micropost: true },
      fallback: organization_micropost_url(current_organization, organization_micropost)
    )

    respond_to do |format|
      format.html { redirect_to redirect_url }
      format.cable_ready do
        render_flash_messages_to_cable_car_and_clear

        edge = @form.micropost&.timeline_node&.outward_edges&.first
        if edge.present?
          edge_context = Timeline::EdgeContext.new(edge: edge, root_context: @form.creation_postable)
          cable_car_add_timeline_entry(timeline_edge_context: edge_context)
        end

        render(cable_ready: cable_car)
      end
    end
  rescue ActiveRecord::RecordInvalid, ActiveModel::ValidationError
    default_respond_to_model_validation_error(html_action: :new, model: @form)
  end

  # ...

  # Only allow a list of trusted parameters through.
  def organization_micropost_params
    params.fetch(:organization_micropost).permit(
      :content
    ).merge(
      current_user: current_user,
      current_organization: current_organization,
      creation_postable: Organization::Micropost::Postable.fetch_from_sgid(
        sgid: params.dig(:organization_micropost, :creation_postable)
      )
    )
  end
end
```

The micropost form

```erb
<%# app/views/organizations/microposts/_form.html.erb %>
<%# locals: (form:, creation_postable: nil, timeline_edge_context: nil) -%>
<%# The `creation_postable` is set to the organization in this example, since we're posting on the Organization's timeline %>
<% input_id = dom_id(form, :content_text_input) %>
<%= application_form_with(model: form,
                          url: url_for([form.micropost, organization_id: current_organization]),
                          data: { reset_on_ajax_success: true, focus_on_ajax_success: id_selector(input_id) }) do |f| %>
  <!-- ... -->
  <% if creation_postable.present? %>
    <%= f.hidden_field :creation_postable, value: creation_postable.postable_sgid %>
  <% end %>
<% end %>
```

And some of the concerns to DRY up the code

```ruby
# app/controllers/concerns/flash_messages_cable_car.rb
module FlashMessagesCableCar
  extend ActiveSupport::Concern

  def render_flash_messages_to_cable_car_and_clear
    cable_car.outer_html(
      selector: ".notification-messages",
      html: render_to_string(partial: "/layouts/snippets/flash_messages", formats: [:html])
    )

    flash.delete(:success)
    flash.delete(:alert)
    flash.delete(:notice)
  end
end

# app/controllers/concerns/timeline_entry_cable_car.rb
module TimelineEntryCableCar
  extend ActiveSupport::Concern

  # ...

  def cable_car_add_timeline_entry(timeline_edge_context:)
    cable_car.prepend(
      **CableCarAndBroadcast::Timeline.prepend_new_edge(timeline_edge_context: timeline_edge_context)
    )
  end
end
```

The concern that prepares the CableCar response while also broadcasting the same payload

```ruby
# app/broadcasters/cable_car_and_broadcast/timeline.rb
module CableCarAndBroadcast::Timeline
  extend CableReady::Broadcaster

  def self.helpers
    ApplicationController.helpers
  end

  def self.prepend_new_edge(timeline_edge_context:)
    html_string = CableCar::TimelineEntryController.render_new_timeline_entry(
      timeline_edge_context: timeline_edge_context
    )

    arguments = {
      existing_selector: helpers.id_selector(timeline_edge_context.to_gid_param),
      selector: helpers.id_selector(helpers.timeline_dom_id(root_context: timeline_edge_context.root_context)),
      html: html_string
    }

    cableready_stream = cable_ready[TimelineStreamChannel]
    cableready_stream.prepend(**arguments)
                     .broadcast_to(timeline_edge_context.stream)
    cableready_stream.broadcast_to(timeline_edge_context.organization_stream)

    return arguments
  end

  # ...
end

# I kept this as a controller so that the mental modeling of Controller => View rendering is retained
# app/controllers/cable_car/timeline_entry_controller.rb
class CableCar::TimelineEntryController < ApplicationController
  def self.render_new_timeline_entry(timeline_edge_context:)
    renderer.render(
      partial: "/timelines/entry",
      locals: {
        edge: timeline_edge_context.edge,
        root_context: timeline_edge_context.root_context
      },
      formats: [:html],
    )
  end

  # ...
end
```

We use the same payload to prepend the post in both the AJAX response and the ActionCable broadcast, giving us consistent updates and DRY code. But a double posting problem presented itself.

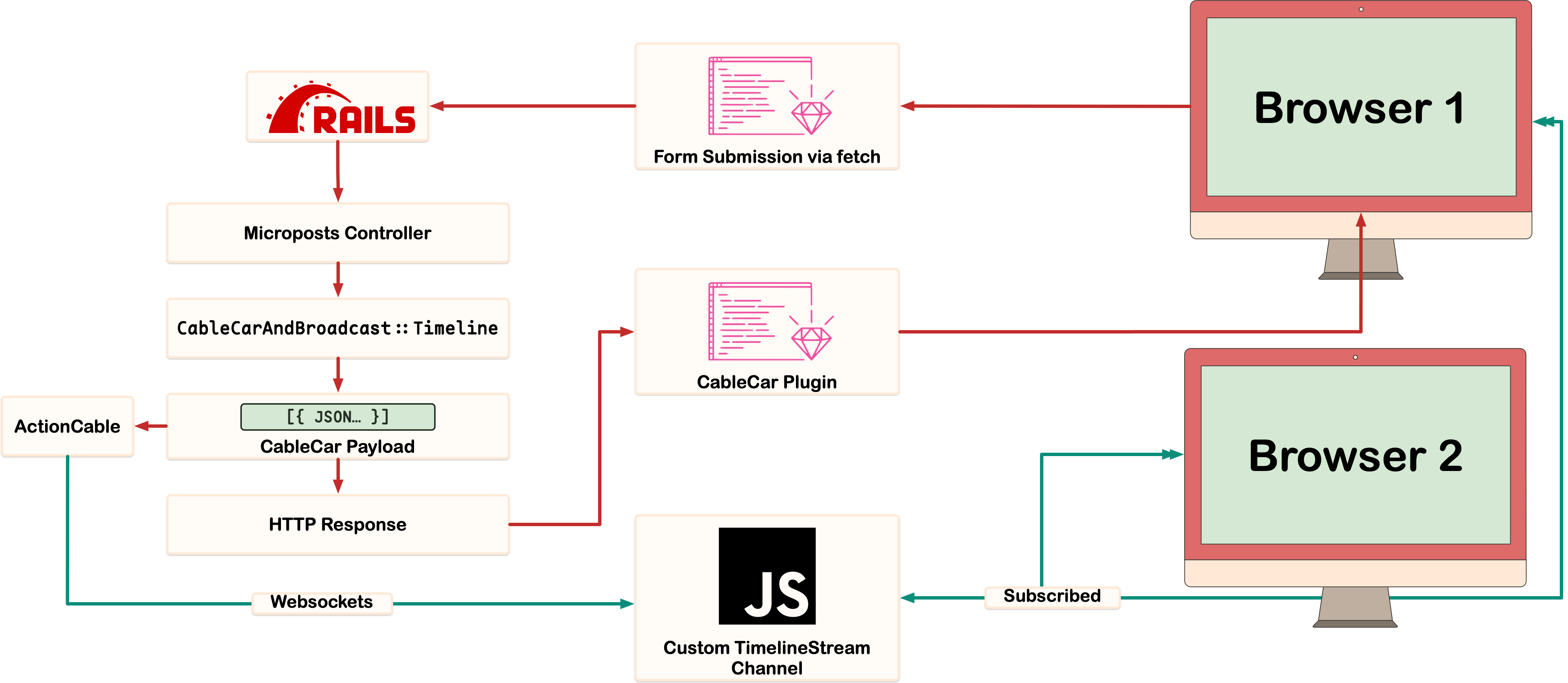

To recap the problem:

- You can post on a timeline, which is done via a fetch request.

- That returns a CableCar set of responses, prepending the post to the timeline.

- But since we also want the timeline to receive real-time updates, the browser will also get the broadcasted update since it’s subscribed to the timeline’s broadcast channel.

Notice how Browser 1 is going to receive both the HTTP response and the websockets broadcast.

So, we need to throw away the prepend operation that comes last (assuming both arrive, which you can’t assume!)

Because CableReady is well-designed, it has events for each stage of the lifecycle for each operation. And because it also gives you the flexibility to add arbitrary data for existing operations, this became a basic fix: a global utility function that is hooked into an event listener:

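```javascript
// Cancel a CableReady prepend when the element it would add is already on the page
// (e.g. the HTTP response already inserted it before the broadcast arrived, or vice versa).
export async function throwAwayExistingCableReadyPrependEventHandler(event) {
  // The server-side `existing_selector` option arrives here as `existingSelector`
  const existingSelector = event?.detail?.existingSelector
  if (!existingSelector) { return }
  if (!document.querySelector(existingSelector)) { return }

  // Setting `cancel` during the before-prepend event makes CableReady skip the operation
  event.detail.cancel = true
}

// ...

import { throwAwayExistingCableReadyPrependEventHandler } from 'practical-framework'

// ...

document.addEventListener(`cable-ready:before-prepend`, throwAwayExistingCableReadyPrependEventHandler)
```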

That’s all it took! And the mental model is straightforward.

- Operations are self-contained, so they operate independently.

- Because they’re a common datatype (an array of objects), the idea of a series of lifecycle events that are dispatched is a natural extension.

- Because they are that common, almost primitive datatype, event handlers can easily inspect their details and, well, handle the event!

So with that sprinkling of code, I’ve got real-time updates that can reuse the exact same payloads as the AJAX request/response that is tried and true.
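If you want to convince yourself the fix holds no matter which copy wins the race, here’s a small simulation. It’s a hypothetical model, not CableReady:

- A `Set` of selectors stands in for `document.querySelector`.
- `prepend` stands in for CableReady firing `cable-ready:before-prepend`, then skipping the operation when `detail.cancel` is set.
- I’m assuming the rendered entry contains the element that `existingSelector` points at, which is what makes the check meaningful.

```javascript
// Hypothetical model of the double-prepend race. Not CableReady's code.
// The Set of selectors stands in for "is this element already in the DOM?".
function createTimeline() {
  return { entries: [], presentSelectors: new Set() }
}

// Mirrors throwAwayExistingCableReadyPrependEventHandler: cancel if already present.
function throwAwayExisting(timeline, detail) {
  if (!detail.existingSelector) return
  if (!timeline.presentSelectors.has(detail.existingSelector)) return
  detail.cancel = true
}

// Stand-in for CableReady: run the "before" hook, then skip the operation if cancelled.
function prepend(timeline, operation) {
  const detail = { ...operation }
  throwAwayExisting(timeline, detail)
  if (detail.cancel) return false

  timeline.entries.unshift(detail.html)
  // Assumption: the inserted HTML contains the element `existingSelector` matches.
  if (detail.existingSelector) timeline.presentSelectors.add(detail.existingSelector)
  return true
}

// The HTTP response and the broadcast carry the *same* operation.
const operation = {
  operation: "prepend",
  selector: "#timeline",
  existingSelector: "#edge_1",
  html: '<article id="edge_1">Posted!</article>',
}

for (const arrivalOrder of [["http", "websocket"], ["websocket", "http"]]) {
  const timeline = createTimeline()
  const applied = arrivalOrder.map(() => prepend(timeline, operation))
  // In both orders, applied is [true, false] and the timeline holds exactly one entry.
}
```

The guard never needs to know which transport delivered the operation, or which one arrived first. It only asks “is this already on the page?” That is also why it stays correct when one of the two copies never arrives at all.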

Designing plain HTML fallbacks

Making sure an app is functional when a regular HTTP request is made is a core tenet of progressive enhancement.

We’ve all run into it before: you click a button or submit a form, and either:

- Nothing seems to happen.

- You get a weird JSON payload.

It’s immensely frustrating, and it’s a solvable problem! At its core, though, folks still aren’t designing well enough for the underlying HTML responses.

This comes down to folks throwing up their hands at progressive enhancement. If a request is made via AJAX, teams don’t build an HTML fallback. Developers don’t think about how to design something that gets “close enough” with just plain requests.

I want to highlight how you can design good HTTP fallbacks, even for a complex set of behaviors.

To reiterate the flows:

- People can post directly to a timeline when viewing it, and they should be able to quickly Post Through It.

- They can also view a post, seeing its metadata and connections.

- Finally, people can edit the post, either directly from the timeline or when viewing it via the permalink.

A few steps above your standard CRUD responses! Especially when you think about how to get the user efficiently back to where they want to be. But it is solvable, and Rails helps you solve it. Let’s look at the code:

Here's the Microposts Controller again, this time focused on show/edit/update and creation errors

```ruby
# app/controllers/organizations/microposts_controller.rb
class Organizations::MicropostsController < Organizations::BaseController
  include CableReady::Broadcaster
  include TimelineEdgeContextLoading
  include TimelineEntryCableCar
  include FlashMessagesCableCar

  # ...

  def show
    breadcrumb(t('loaf.breadcrumbs.organization_micropost'),
               organization_micropost_url(current_organization, @organization_micropost))

    respond_to do |format|
      format.html
      format.cable_ready do
        render_micropost_show_in_container(organization_micropost: @organization_micropost)
        render(cable_ready: cable_car)
      end
    end
  end

  def new
    @creation_postable = Organization::Micropost::Postable.fetch_from_sgid(sgid: params[:postable_sgid])
    breadcrumb :new_organization_micropost,
               postable_new_organization_micropost_url(current_organization, params[:postable_sgid])

    @form = Organization::MicropostForm.new(
      current_organization: current_organization,
      current_user: current_user
    )
  end

  def edit
    breadcrumb(t('loaf.breadcrumbs.edit_organization_micropost'),
               edit_organization_micropost_url(current_organization, @organization_micropost))

    @form = Organization::MicropostForm.new(
      micropost: @organization_micropost,
      current_organization: current_organization,
      current_user: current_user
    )

    respond_to do |format|
      format.html
      format.cable_ready do
        cable_car.outer_html(
          selector: "[data-micropost-id='#{@organization_micropost.id}']",
          html: render_to_string(partial: "form",
                                 locals: { form: @form, timeline_edge_context: @timeline_edge_context },
                                 formats: [:html])
        )
        render(cable_ready: cable_car)
      end
    end
  end

  def create
    # ...
  rescue ActiveRecord::RecordInvalid, ActiveModel::ValidationError
    default_respond_to_model_validation_error(html_action: :new, model: @form)
  end

  def update
    @form = Organization::MicropostForm.new(organization_micropost_params.merge(
      micropost: @organization_micropost
    ))
    @form.save!

    organization_micropost = @form.micropost
    updated_message = t('little_crm.microposts.updated_message')
    flash[:success] = flash_success_with_icon(message: updated_message, icon: helpers.micropost_icon)

    respond_to do |format|
      format.html { redirect_to organization_micropost_url(current_organization, organization_micropost) }
      format.cable_ready do
        render_flash_messages_to_cable_car_and_clear
        render_micropost_cable_ready_update
        render(cable_ready: cable_car)
      end
    end
  rescue ActiveRecord::RecordInvalid, ActiveModel::ValidationError
    default_respond_to_model_validation_error(html_action: :edit, model: @form)
  end

  # ...

  private

  def render_micropost_show_in_container(organization_micropost:)
    cable_car.inner_html(
      selector: "[data-micropost-details-container]",
      html: render_to_string(partial: "organizations/microposts/show/post",
                             locals: { organization_micropost: organization_micropost },
                             formats: [:html])
    )
  end

  def render_micropost_cable_ready_update
    if @timeline_edge_context.present?
      cable_car_replace_timeline_entry(timeline_edge_context: @timeline_edge_context)
    else
      render_micropost_show_in_container(organization_micropost: @organization_micropost)
    end
  end

  # ...
end
```

The Framework-level concern to handle errors in a form submission

```ruby
module PracticalFramework::Controllers::ErrorResponse
  extend ActiveSupport::Concern

  def render_json_error(format:, model:)
    format.json do
      # Renders a custom payload that client-side JS can handle.
      # Likely will get refactored to use CableCar, this is pre-CableCar code
      errors = PracticalFramework::FormBuilders::Base.build_error_json(model: model, helpers: helpers)
      yield(errors) if block_given?
      render json: errors, status: :bad_request
    end
  end

  def render_html_error(action:, format:)
    format.html do
      yield if block_given?
      render action, status: :bad_request
    end
  end

  def default_respond_to_model_validation_error(html_action:, model:)
    respond_to do |format|
      render_json_error(format: format, model: model)
      render_html_error(action: html_action, format: format)
    end
  end
end
```

The TimelineEntryCableCar concern again

```ruby
# app/controllers/concerns/timeline_entry_cable_car.rb
module TimelineEntryCableCar
  extend ActiveSupport::Concern

  # ...

  def cable_car_replace_timeline_entry(timeline_edge_context:)
    cable_car.outer_html(
      **CableCarAndBroadcast::Timeline.replace_timeline_entry(timeline_edge_context: timeline_edge_context)
    )
  end
end
```

CableCarAndBroadcast::Timeline, this time for replacing a micropost

```ruby
# app/broadcasters/cable_car_and_broadcast/timeline.rb
module CableCarAndBroadcast::Timeline
  extend CableReady::Broadcaster

  def self.helpers
    ApplicationController.helpers
  end

  # ...

  def self.replace_timeline_entry(timeline_edge_context:)
    html_string = CableCar::TimelineEntryController.render_timeline_entry_component(
      timeline_edge_context: timeline_edge_context
    )

    arguments = {
      selector: helpers.id_selector(timeline_edge_context.to_gid_param),
      html: html_string
    }

    cableready_stream = cable_ready[TimelineStreamChannel]
    cableready_stream.outer_html(**arguments)
                     .broadcast_to(timeline_edge_context.stream)
    cableready_stream.broadcast_to(timeline_edge_context.organization_stream)

    return arguments
  end
end

# app/controllers/cable_car/timeline_entry_controller.rb
class CableCar::TimelineEntryController < ApplicationController
  # ...

  def self.render_timeline_entry_component(timeline_edge_context:)
    renderer.render(
      partial: "timeline_entry",
      locals: {
        timeline_edge_context: timeline_edge_context,
      },
      formats: [:html],
    )
  end
end
```

The micropost form

```erb
<%# app/views/organizations/microposts/_form.html.erb %>
<%# locals: (form:, creation_postable: nil, timeline_edge_context: nil) -%>
<% input_id = dom_id(form, :content_text_input) %>
<%= application_form_with(model: form,
                          url: url_for([form.micropost, organization_id: current_organization]),
                          data: { reset_on_ajax_success: true, focus_on_ajax_success: id_selector(input_id) }) do |f| %>
  <!-- ... -->
  <%= f.fieldset do %>
    <!-- ... -->
    <%= f.input_wrapper do %>
      <%= f.micropost_field(:content,
                            autofocus: params.has_key?(:autofocus_micropost),
                            id: input_id) %> <%# ... %>
      <section class="cluster-compact justify-space-between">
        <%= f.field_errors :content %>
        <!-- ... -->
        <% end %>
      </section>
    <% end %>

    <%= f.fallback_error_section(options: {id: dom_id(form, :generic_errors)}) %>

    <section class="cluster-compact">
      <%= render PracticalFramework::Components::StandardButton.new(
            title: t("little_crm.microposts.form.submit_button_title"),
            html_options: {data: {disable: true}}) #...
      %>

      <%# The timeline_edge_context is the record that's used to show the timeline. The timeline edges reference
          microposts, but are a separate set of models to separate their concerns -%>
      <% if timeline_edge_context.present? %>
        <%= micropost_cancel_edit_button(formaction: organization_timeline_edge_context_url(edge_context_gid: timeline_edge_context.to_gid)) %>
        <%= hidden_field_tag :edge_context_gid, timeline_edge_context.to_gid %>
      <% elsif form.persisted? %>
        <%# Show the "Nevermind" button when editing a micropost via its permalink %>
        <%= micropost_cancel_edit_button(formaction: url_for([form.micropost, organization_id: current_organization])) %>
      <% end %>
    </section>
  <% end %>
  <!-- ... -->
<% end %>
```

A big thing to aim for with your fail-safe fallback is to get something that mostly works and gets the person near the right path. It’s obviously not ideal, but it is there, and it works in an emergency.

Another important note is how errors are handled. This is something I’ve chipped away at for months because we’ve lost the institutional knowledge on how to best do this (and no one’s really codified it).

The controller actions rescue any validation errors that are raised (I’m a huge proponent of using the bang! methods for creation/saving/validation because they make the control flow easier to follow). These actions can respond to both:

- A request that accepts JSON data. In this case, the errors are serialized into a format that the client-side JS can use to update the form’s validation state using the constraint validation APIs the browser provides and insert error messages.

- A standard request that accepts HTML. In this case, we re-render the form that currently has the errors, which then renders the errors as part of its markup.
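For the JSON branch, the browser already ships the machinery for showing field errors: the constraint validation API. Here’s a minimal sketch of wiring server errors into it. `form.elements.namedItem`, `setCustomValidity`, and `reportValidity` are standard DOM APIs; everything else is an assumption:

- The payload shape (field name mapped to an array of messages) is a guess, since the post doesn’t show what `build_error_json` produces.
- The keys would need to match the inputs’ `name` attributes, e.g. `organization_micropost[content]` for a Rails form.
- `applyServerErrors` and `clearCustomValidityOnInput` are hypothetical helpers.

```javascript
// Hypothetical sketch: show server-side validation errors with the browser's own
// constraint validation API instead of custom error UI.
// ASSUMPTION: errors arrive as { fieldName: ["message", ...] }; the real payload
// from build_error_json isn't shown in this post and may differ.

function applyServerErrors(form, errors) {
  let firstInvalid = null

  for (const [name, messages] of Object.entries(errors)) {
    const field = form.elements.namedItem(name)
    if (!field) continue // e.g. model-level errors with no matching input
    field.setCustomValidity(messages.join(", "))
    firstInvalid ??= field
  }

  // Shows the browser's native validation message for the first invalid field.
  firstInvalid?.reportValidity()
  return firstInvalid
}

// setCustomValidity() sticks until cleared, so clear it once the person edits the field.
function clearCustomValidityOnInput(event) {
  event.target.setCustomValidity?.("")
}

// In the browser, something like:
// applyServerErrors(formElement, errorsFromJsonResponse)
// formElement.addEventListener("input", clearCustomValidityOnInput)
```

Clearing on `input` matters. Without it, a field stays invalid even after the person fixes it, because the custom message persists until something resets it.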

Note that the HTML fallback does not need to be perfect. We just need to make sure the user isn’t unnecessarily blocked.

Let’s look at what happens if there is an error when someone is submitting a post on the organization’s timeline.

When JS is working, the errors are rendered inline. When JS is not working, the server responds with a basic fallback page that re-renders the form with its errors.

Demos showing off how the microposts form works even when JS is disabled

Again, this is not the ideal experience. But it is an experience that allows the person to move forward and do what they need to do.

Admittedly, there are some cases where you do need JS; a concrete example is Stripe’s Payment Element. In those cases, I think progressive enhancement means rendering an error message directly on the page, something like:

Sorry! We ran into an issue rendering the payment element here. Please try reloading the page, and contact support if you still run into issues!

That’s progressive enhancement because the user knows what happened, what’s going on, and how to resolve it. And it’s infinitely better than a page that’s mysteriously busted or a form that won’t submit and shows no error messages.
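Here’s roughly what that can look like in code. This is a hypothetical sketch, not Stripe’s API. `mountWidget` stands in for whatever mounts the JS-only component, and the container is any element you’ve reserved for it. The point is that a failure produces a visible, actionable message instead of a silently broken form.

```javascript
const FALLBACK_MESSAGE =
  "Sorry! We ran into an issue rendering the payment element here. " +
  "Please try reloading the page, and contact support if you still run into issues!"

// Hypothetical helper: try to mount a JS-only widget; on failure, tell the person what happened.
function mountOrExplain(container, mountWidget) {
  try {
    mountWidget(container)
    return true
  } catch (error) {
    console.error("Widget failed to mount:", error) // keep the details for debugging
    container.setAttribute("role", "alert") // lets assistive tech announce the message
    container.textContent = FALLBACK_MESSAGE
    return false
  }
}
```

This only covers the “JS loaded, but broken” rung. For “no JS at all,” one complementary option is to server-render the same message inside the container and have a successful mount replace it. That way the message only survives when the widget never showed up. If your widget loads asynchronously, the same shape works with `async`/`await` around the mount call.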

You can imagine how I buried my head in my hands when I demoed this to an acquaintance and their shitpost response was:

“I didn’t realize you could make usable websites without Javascript”

(Side note: this was the impetus for one of my best shitposts, IMO)

UJS is still a fantastic design, and you should be using Mrujs

A quick history primer: UJS stands for “Unobtrusive Javascript”, and Wikipedia has a great summary & overall page:

Unobtrusive JavaScript is a general approach to the use of client-side JavaScript in web pages so that if JavaScript features are partially or fully absent in a user’s web browser, then the user notices as little as possible any lack of the web page’s JavaScript functionality.

Rails 6 shipped with a library called, shockingly, UJS. Its design was relatively small, but immensely powerful and flexible. By using data attributes & event handlers, you could augment your markup to perform actions if Javascript was enabled. Basic stuff that every web app wants, such as:

- Submitting forms via AJAX.

- Presenting confirmation dialogs.

- Enabling/disabling elements (and changing the inner content if needed).
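Here’s a rough sketch of that data-attribute style, using confirmation dialogs as the example. It is not Mrujs’s implementation; Mrujs ships its own `data-confirm` handling, so you wouldn’t write this yourself. `confirmHandler` is a hypothetical name, and `askForConfirmation` is injected so the logic can be exercised outside a browser. Without JavaScript, the link or button still works, just without the extra prompt.

```javascript
// Hypothetical UJS-style handler: behavior is declared in markup (data-confirm="...")
// and attached with a single delegated listener on the document.
function confirmHandler(askForConfirmation) {
  return function handleConfirm(event) {
    // closest() lets a click on an icon inside <a data-confirm> still count
    const element = event.target?.closest?.("[data-confirm]")
    if (!element) return // not opted in: let the browser do its normal thing

    if (!askForConfirmation(element.dataset.confirm)) {
      event.preventDefault() // stop the navigation or submission
      event.stopImmediatePropagation() // and keep later handlers (e.g. AJAX) from running
    }
  }
}

// In the browser, registered in the capture phase so it runs before other click handlers:
// document.addEventListener("click", confirmHandler((message) => window.confirm(message)), true)
```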

While UJS unceremoniously got dumped by DHH, Konnor Rogers has written a modern version, Mrujs, and you should absolutely be using it. The UJS design is evergreen because it’s lightweight and leans into the browser’s strengths.

UJS, largely speaking, is focused on augmentation. It operates on the assumption that Javascript will not be available, and that you might not need it in the first place. Which is becoming increasingly true! Look at all the stuff we’ve gotten in Baseline! Unlike Turbo/Hotwire/React/etc., UJS is designed to take up as little space as possible, defer to the browser, and automatically have its foot out the exit door when it knows it’s not needed. Why would you waste bandwidth on a framework that browsers don’t need?

The other browser strength that UJS rests on is heavy usage of event dispatching. The browser is a machine that spits out events and can handle those events; it’s really good at it, too! The original UJS library wasn’t as good as I’d like about its event handling (or even documenting it), but Mrujs is.
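Here’s that event model in miniature, using the standard `EventTarget` and `Event` APIs. They’re also globals in modern Node, so this runs outside a browser. The `ajax:before` name is borrowed from UJS conventions purely for illustration; I’m not claiming this is how Mrujs wires things internally. What it shows is why this style composes so well: independent listeners each handle one concern, and any of them can veto a cancelable event.

```javascript
const form = new EventTarget()
const log = []

// Two independent listeners; neither knows about the other.
form.addEventListener("ajax:before", () => log.push("disable submit button"))
form.addEventListener("ajax:before", (event) => {
  log.push("veto: form is not ready")
  event.preventDefault() // only has an effect because the event is cancelable
})

// dispatchEvent() runs listeners synchronously, in registration order, and returns
// false if any listener called preventDefault() on a cancelable event.
const shouldSend = form.dispatchEvent(new Event("ajax:before", { cancelable: true }))
// shouldSend is false; log lists both listeners' entries in registration order.
```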

Mrujs event handlers to reset and autofocus a form

```javascript
// Reset the form after a successful AJAX submission, if it opted in via data-reset-on-ajax-success
export async function resetOnAjaxSuccessEvent(event) {
  const target = event.target
  if (!target?.dataset?.resetOnAjaxSuccess) { return }
  target.reset()
}

// Focus the element matching the data-focus-on-ajax-success CSS selector after a successful submission
export async function focusOnAjaxSuccessEvent(event) {
  const target = event.target
  const focusTargetSelector = target?.dataset?.focusOnAjaxSuccess
  if (!focusTargetSelector) { return }

  const focusTarget = document.querySelector(focusTargetSelector)
  focusTarget?.focus()
}

// ...

import { resetOnAjaxSuccessEvent, focusOnAjaxSuccessEvent } from 'practical-framework'

document.addEventListener(`ajax:success`, resetOnAjaxSuccessEvent)
document.addEventListener(`ajax:success`, focusOnAjaxSuccessEvent)
```

Other bits & bobs

CableReady’s documentation is pretty comprehensive. Certainly more comprehensive than the docs for most new Rails features since Rails 5. I don’t necessarily like the tone, but I’ll take memeified docs over no docs any day of the week.

One argument I’ve heard against CableCar is that sometimes the API doesn’t feel very Rails-like. I haven’t found that to be a significant deterrent, and that could be solved with refactoring/helpers/magic. Also, there are a few counterarguments:

- One ethos of Rails is to use sensible, easily understandable behavior in 90% of cases. CableCar is a protocol that does that.

- Rails also advocates for “using boring technology/patterns.” CableReady/CableCar are infinitely more boring than Turbo/Hotwire. 👀

- Browsers are complex systems, and using their universal mechanisms allows more developers to work on Rails apps and encourages the growth of the ecosystem. Much more than a bespoke frontend framework.

Musing: How much of an app really needs to be real-time?

As I was building this out, I conducted a very unscientific poll. The results aren’t even close to being statistically significant, but I still think they’re interesting!

From these results, my experience working on & maintaining apps, and hearing what folks struggle with in their apps, the vibe is that going real-time-first only happens in demos & simple code.

I also asked Konnor Rogers, who has extensive frontend knowledge and is part of the Web Awesome team! And he agreed with my suspicions:

🌶️ the more I think about morphing, the more I think it's the wrong tool for the job.

— Konnor Rogers (@RogersKonnor) August 5, 2024

It's easy to end up with stale nodes, nodes that have event listeners they shouldn't, unless your server is recording every possible client interaction, it'll still wipe away client state. https://t.co/tJbuxLpkmb

I've written like 3 responses to this, but char limit is screwing me.

— Konnor Rogers (@RogersKonnor) August 5, 2024

morphing / preserving nodes has a place. Implicitly doing so is a super easy footgun that's hard to debug. View transitions should help solve this problem in the future. Explicit marking makes debugging easier

There's the other problem of it's impossible to stream HTML with morphing. (Well not impossible, but way more error prone, and behavior is bizarre)

— Konnor Rogers (@RogersKonnor) August 5, 2024

Turbo also requires full HTML responses before it can render a new page, leading to additional "slowness" if not cached

@jaffathecake has a great article from *checks notes* 8 years ago which is about Turbolinks, but still applies to Turbo.

— Konnor Rogers (@RogersKonnor) August 5, 2024

By needing the full response before you can render, you can actually make content slower particularly on unreliable networks.https://t.co/k9wA6xkUAf

Yes. It's a powerful tool with sharp edges, little documentation on the inner workings, and don't get me started on the meta tags. They feel like someone set some fucking tanagrams in front of me and was like "figure out the correct combination to fit your use case"

— Konnor Rogers (@RogersKonnor) August 5, 2024

my thoughts are very few features need realtime / reactive.

HTTP requests / responses cycles are well understood. Have great caching, work well with load balancing etc.

Realtime / reactive has an entirely different set of problems. You probably want optimistic updates, which means some kind of CRDT backing mechanism. You’ll probably want local first which usually means a heavy client.

And this doesn’t even touch on auth / accessibility. Realtime / reactive is hard to get right.

[…]

FWIW, Turbo is like 99% request / response. So little of turbo is real time / reactive. It’s almost entirely HTTP and like a tiny bit of web sockets for model broadcasts

[…]

where I think Turbo went wrong is the following:

- Links should only be able to make `GET` requests

- `<turbo-stream>` should be a `<turbo-action>`, stream is just too confusing. It makes people think they’re using a web socket when it’s really just a DOM operation

- Turbo frames are clunky with managing ids. Scoped navigation as the default is equally weird.

- There’s like 40 different combo of meta tags. It’s so hard to keep them all straight.

Hearing someone else with a lot more experience mirror my thoughts helps me feel confident in my claims in this post.

When you think in terms of complexity & the mismatch between technical and user expectations, users might not actually need truly real-time updates. At Blue Ridge Ruby, Brian Childress gave a great talk about managing complexity. The biggest takeaway I got from it is that, in practice, “real-time updates” often means “8 AM, once a day” for clients. I highly recommend watching it!

Given all of this, I believe that the best approach for the majority of apps is:

Write progressively enhanced request/responses from the ground up, with the jackpot ideal being Websocket updates and the general assumption that your JS will be broken or not fully loaded.

Which is what you get with smart controller design & abstractions, UJS/CableCar, and a sprinkling of CableReady when it matters.

We’ve wasted a lot of time reinventing solved problems—arguably worse!

I don’t want to belabor this point too long because, frankly, it’s exhausting, demoralizing, and people with more knowledge of the internal politics than I do have already covered this.

UJS is such a lightweight tool, which is the direction that’s best as browsers do more and more. It slots in nicely with other browser APIs, like Web Components, and even heavier frontend frameworks. Unfortunately, it got dumped unceremoniously for a stack that’s significantly more complex. We were too quick to throw out UJS, which still has some excellent design decisions, and CableCar’s protocol works better than Turbo Streams for updates that can be progressively enhanced.

Turbo and Hotwire were latecomers. CableReady shipped years before them, and as I’ve (hopefully) shown, it solved the same problems better. So much so that Turbo/Hotwire 8 has enlisted some CableReady maintainers to get to feature parity with CableReady.

Even if they can get there (I doubt it; the CableReady approach is much more robust), the question is: why the hell are we doing that? Why don’t we just use the better framework, even if it didn’t originate from Rails core? Why not have Rails ship with it by default, or, hell, contribute to it? DHH has no problem jettisoning frontend approaches between major versions (he’s done so multiple times!). There’s precedent for it.

The best thing to happen to Rails was when Merb renamed itself to Rails & gave developers a proper plugin architecture. You aren’t chained to Rails’ defaults, and we can communally choose the better tools without having Rails “bless it.” Because even if they do bless a frontend framework, that’s no guarantee for success, maintenance, or growth. So I’d argue we should choose the better tool. Community consensus is also a form of standardization.